Why We Use Foundation Models at Brim

July 9, 2025

At Brim, we spend a lot of time thinking about how to get the most accurate, scalable, and shareable insights out of unstructured clinical data. That means choosing the right AI strategy when leveraging large language models: one that balances output quality with long-term maintainability, flexibility, and cost.

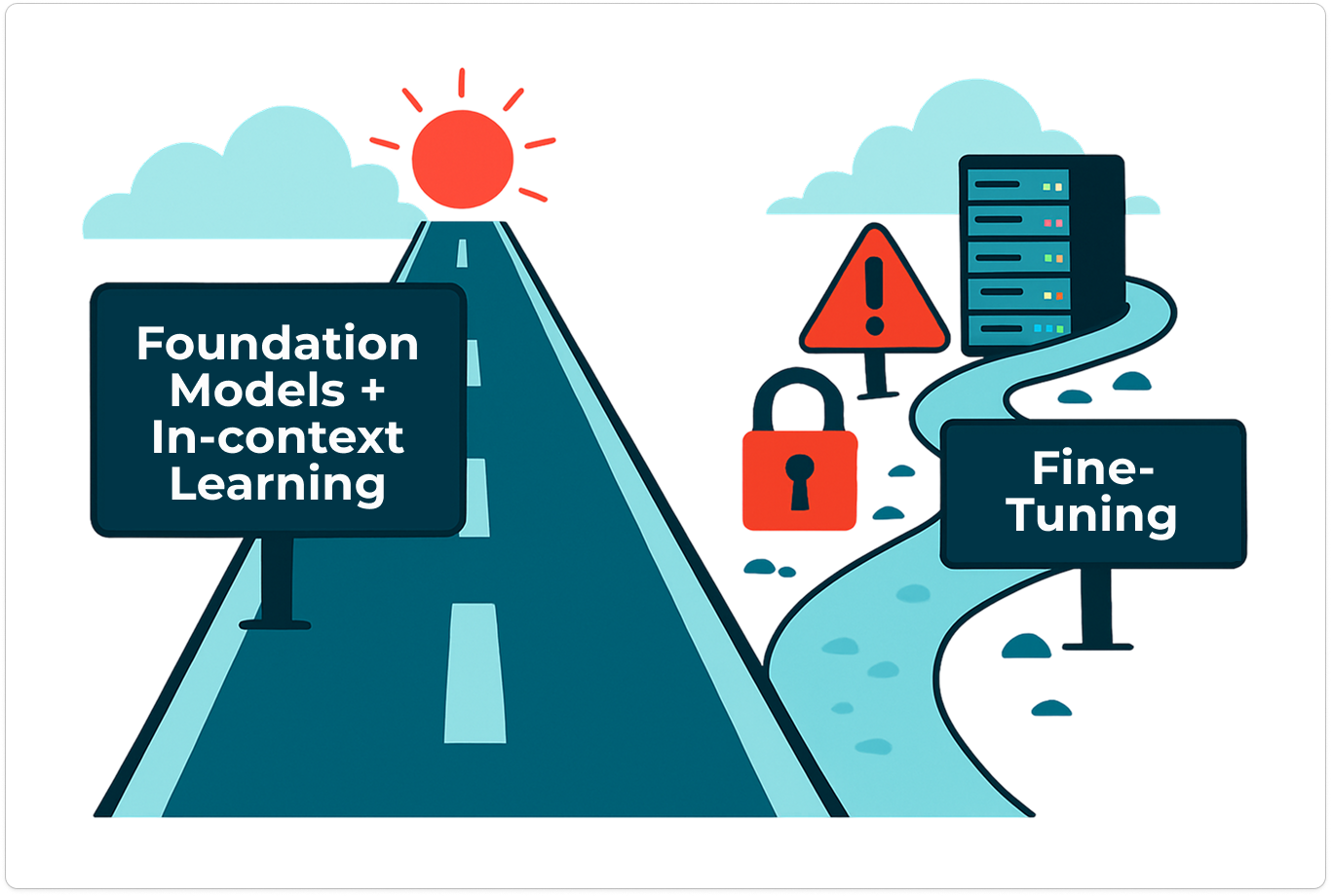

After years of experience in medical informatics and chart abstraction, we’ve landed on a clear preference: foundation models with in-context learning.

This article lays out why, for chart abstraction, we prefer this strategy over building fine-tuned models.

Key Takeaways

- Foundation models are strong and scalable generalist models that are up-to-date and enable healthcare-grade security through existing agreements.

- Fine-tuning is expensive, not shareable, and hard to maintain.

- Brim combines foundation models with in-context learning and a hierarchy of variables to achieve accuracy, traceability and complex reasoning.

- Brim enables security and portability: project-specific variables keep learning secure inside a project, and variable owners can export variables to other sites for abstraction consistency.

Foundation Models: Strong, Shared, and Regularly Updated

Foundation models (like those from OpenAI, Google, AWS, or Meta) are powerful and scalable general-purpose models trained on massive corpora. They perform well across a wide range of clinical abstraction tasks, especially tasks that are moderately complex but don’t require multi-step reasoning.

We like foundation models because:

- They’re secure. Hospital A and Hospital B can use the same model, but enterprise business associate agreements (BAAs) ensure that their data is not shared. This supports Brim's commitment to top-notch data security.

- They’re the most up-to-date. Top foundation models are updated on a regular cadence, and "wrappers" benefit from those improvements without retraining.

- They’re strong generalists. For many clinical use cases, we don’t need a custom model; we just need a consistent way to guide a strong model toward the right output.

In addition, using foundation models for multiple purposes empowers healthcare organizations to see results from AI with lower upfront investment.

If foundation models are so great, how do we make sure they have the necessary knowledge to accurately perform chart abstraction? We believe that in-context learning with variables is an excellent way to achieve specialized knowledge while preserving the benefits of using foundation models.

In-Context Learning with a Hierarchy of Variables: The Best of Both Worlds

Rather than fine-tuning a model with PHI-laden examples (which can’t be shared or reused), Brim relies on combining a hierarchy of variables with in-context learning.

With Brim, you can define how a variable should be extracted from clinical notes, specifying semantics, constraints, and handling of ambiguous cases. That definition travels with the variable, letting you apply it across patients, projects, and even institutions, all without retraining a model.
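
To make the idea of a definition that "travels with the variable" concrete, here is a minimal Python sketch. The `VariableDefinition` schema, its field names, and the JSON export format are all assumptions made for illustration; they are not Brim's actual data model or file format.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class VariableDefinition:
    """Hypothetical schema for a portable variable definition."""
    name: str
    instruction: str                      # semantics: what to extract and how
    allowed_values: list[str] = field(default_factory=list)  # constraints
    ambiguity_rule: str = ""              # how to handle unclear cases


def export_variable(var: VariableDefinition) -> str:
    """Serialize a definition so it can be shared without any patient data."""
    return json.dumps(asdict(var), indent=2, sort_keys=True)


def import_variable(payload: str) -> VariableDefinition:
    """Rebuild a definition from its exported JSON form."""
    return VariableDefinition(**json.loads(payload))


# Illustrative variable; the wording is made up for this example.
smoking = VariableDefinition(
    name="smoking_status",
    instruction="Classify the patient's most recent documented smoking status.",
    allowed_values=["current", "former", "never", "unknown"],
    ambiguity_rule="If notes conflict, prefer the most recent note.",
)

payload = export_variable(smoking)
restored = import_variable(payload)
```

Because the definition is plain text, the exported payload contains instructions only, and the round trip (`restored == smoking`) involves no model retraining.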

You can then layer these variables, referencing other variables and text to achieve more complex reasoning. A research project might have a few levels of hierarchy, while clinical trial inclusion criteria and other complex tasks can have hundreds of variables across a dozen levels. This modular approach to data abstraction helps generalist models be more successful.
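
One way to picture a hierarchy of variables is as a dependency graph that is evaluated from the bottom up. The sketch below uses Python's standard `graphlib` module; the variable names and the `dependencies` mapping are hypothetical, and this is not a description of how Brim schedules its variables internally.

```python
from graphlib import TopologicalSorter

# Hypothetical variables: each name maps to the variables it references.
dependencies = {
    "diagnosis_date": set(),
    "treatment_start_date": set(),
    "days_to_treatment": {"diagnosis_date", "treatment_start_date"},
    "eligible": {"days_to_treatment"},
}


def evaluation_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every variable comes after the ones it references."""
    return list(TopologicalSorter(deps).static_order())


def levels(deps: dict[str, set[str]]) -> dict[str, int]:
    """Assign each variable a hierarchy level (1 = reads only from the notes)."""
    depth: dict[str, int] = {}
    for name in evaluation_order(deps):
        depth[name] = 1 + max((depth[d] for d in deps[name]), default=0)
    return depth


order = evaluation_order(dependencies)
level_of = levels(dependencies)
```

In this toy project, `eligible` sits at level 3: it depends on `days_to_treatment`, which in turn depends on two variables extracted directly from the notes.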

Brim approaches in-context learning by combining examples provided by the researcher in the instruction with automatic learning from feedback given on human-reviewed examples.
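
As a rough illustration of in-context learning, the sketch below assembles a prompt from an instruction, researcher-provided examples, and examples drawn from human review. The `Example` type, the `build_prompt` function, the prompt layout, and the synthetic note text are all assumptions for this example; Brim's actual prompt construction and example selection are not described in this article.

```python
from dataclasses import dataclass


@dataclass
class Example:
    note_excerpt: str
    correct_value: str
    source: str  # "researcher" (written in the instruction) or "review" (human feedback)


def build_prompt(instruction: str, examples: list[Example], note: str,
                 max_examples: int = 4) -> str:
    """Place the most recent examples in the prompt ahead of the new note."""
    chosen = examples[-max_examples:] if max_examples > 0 else []
    lines = [f"Instruction: {instruction}", ""]
    for ex in chosen:
        lines += [f"Note: {ex.note_excerpt}", f"Answer: {ex.correct_value}", ""]
    lines += [f"Note: {note}", "Answer:"]
    return "\n".join(lines)


# Synthetic examples only; no real patient text.
examples = [
    Example("Pt quit smoking in 2010.", "former", "researcher"),
    Example("Denies tobacco use, lifelong.", "never", "researcher"),
    Example("Smokes 1 ppd, counseled on cessation.", "current", "review"),
]

prompt = build_prompt(
    "Classify the most recent documented smoking status.",
    examples,
    "Reports stopping cigarettes two years ago.",
    max_examples=2,
)
```

The model's weights never change here: the "learning" lives entirely in the text of the prompt, which is why a newer foundation model can be swapped in without retraining.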

Pairing a hierarchy of variables with in-context learning keeps the security, up-to-date technology, and generalist capabilities of foundation models while adding other benefits, including:

- Multi-step reasoning. By defining a hierarchy of variables that reference each other, Brim allows complex reasoning to be achieved using a generalist model. Each variable also learns from human feedback, so each one benefits individually from in-context learning.

- Traceability. Creating a hierarchy of variables allows tracing the reasoning at each step. In Brim's case, the reasoning and the raw text used to reach a conclusion are available for each variable value, making it easy to tweak prompts and improve the final result.

- Data owners control data sharing. Variable definitions contain the learning, not the model, which means the learning stays inside your project by default. Multi-site projects have the option to share variables for strong abstraction agreement across sites.
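
The traceability point above can be sketched as a result record that keeps its reasoning and supporting text next to each value. The `VariableResult` structure and the `trace` helper are hypothetical, and the values and quotes are synthetic; this is only meant to show what "reasoning at each step" could look like as data.

```python
from dataclasses import dataclass, field


@dataclass
class VariableResult:
    """Hypothetical record: a value plus the reasoning and raw text behind it."""
    name: str
    value: str
    reasoning: str
    evidence: list[str] = field(default_factory=list)             # quoted note text
    inputs: list["VariableResult"] = field(default_factory=list)  # referenced variables


def trace(result: VariableResult, indent: int = 0) -> list[str]:
    """Walk the hierarchy top-down, listing each value with its reasoning and evidence."""
    pad = "  " * indent
    lines = [f"{pad}{result.name} = {result.value} ({result.reasoning})"]
    lines += [f'{pad}  evidence: "{quote}"' for quote in result.evidence]
    for child in result.inputs:
        lines += trace(child, indent + 1)
    return lines


# Synthetic results for illustration.
days = VariableResult(
    name="days_to_treatment",
    value="12",
    reasoning="treatment began 12 days after diagnosis",
    evidence=["Dx confirmed 03/01.", "Started chemo 03/13."],
)
eligible = VariableResult(
    name="eligible",
    value="yes",
    reasoning="days_to_treatment is within the 30-day window",
    inputs=[days],
)

report = trace(eligible)
```

Printing `report` line by line shows the top-level conclusion first, followed by each referenced variable and the note text that supports it.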

Why We Don’t Fine-Tune

Fine-tuning has its place. It can compress complex models, lower runtime costs, and improve performance on extremely specific tasks. But in clinical research workflows, it comes with serious drawbacks:

- It’s hard to share. If your fine-tuned model was trained on PHI, you can’t share or publish it. That limits generalizability and reproducibility.

- It’s expensive. Fine-tuning takes time, money, and machine learning operations (MLOps) infrastructure. And it often locks you into a setup that’s hard to maintain.

- It ages quickly. AI is moving incredibly quickly. A fine-tuned model from three months ago may now underperform compared to a new foundation model out of the box.

- It adds friction. Managing multiple fine-tuned endpoints for different abstraction tasks or institutions is a headache.

In short: fine-tuning can improve accuracy on challenging tasks, but high-quality outputs are also achievable using strong foundation models with thoughtful prompts, a hierarchy of variables that each handle a specific task, and in-context learning.

What This Means for Brim

Brim is built to help researchers extract structured insights from unstructured data quickly, reproducibly, and with minimal overhead.

By leveraging foundation models plus in-context learning, we:

- Avoid PHI in model training

- Keep projects adaptable and lightweight

- Take advantage of cutting-edge model improvements

- Let customers focus on research, not infrastructure

Want to see it in action? Book a demo today.